AWS Cloud-Based Engineering Interview Questions 2024

- Aws cloud based engineering interview questions

- 1) What is a cloud? Please explain SAAS, PAAS, and IAAS (Aws cloud based engineering interview questions)

- 2) What is the relation between the Availability Zone and Region? (Aws cloud based engineering interview questions)

- Relationship Between Availability Zone (AZ) and Region in Cloud Computing

- Region

- Availability Zone (AZ)

- Key Relationships

- 3) Explain AWS IAM. Describe AAA (authentication, authorization, and accounting) (Aws cloud based engineering interview questions)

- AAA (Authentication, Authorization, and Accounting)

- Why AWS IAM is Critical

- 4) How do you upgrade or downgrade a system with near-zero downtime?(Aws cloud based engineering interview questions)

- Blue-Green Deployment

- Rolling Updates

- Canary Deployment

- General Steps for Near-Zero Downtime Upgrades/Downgrades

- Q5) What is a DDoS attack, and what services can minimize them?(Aws cloud based engineering interview questions)

- What is a DDoS Attack?

- Services to Minimize DDoS Attacks

- Q6) What is the difference between snapshot, image, and template? (Aws cloud based engineering interview questions)

- Snapshot

- Image

- Template

- When to Use Each:

- Q7) How will you create an auto-scaling group in AWS? Explain the purpose of creating an auto-scaling group in AWS. (Aws cloud based engineering interview questions)

- Creating an Auto Scaling Group in AWS

- Purpose of Creating an ASG

- Steps to Create an Auto Scaling Group

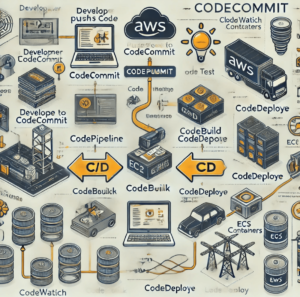

- Q8) What is CICD. Please configure AWS Codecommit. Please provide details with Diagram and flow of implementation. (Aws cloud based engineering interview questions)

- What is CI/CD?

- Steps to Configure AWS CodeCommit

- Q9) What Is Amazon Virtual Private Cloud (VPC) and Why Is It Used? What are the differences between NAT Gateways and NAT Instances?

- Why Use Amazon VPC?

- Differences between NAT Gateways and NAT Instances

- Q10) What is the difference between Amazon RDS, DynamoDB, and Redshift?(Aws cloud based engineering interview questions)

- Amazon RDS (Relational Database Service)

- Amazon DynamoDB

- Amazon Redshift (Aws cloud based engineering interview questions)

Aws cloud based engineering interview questions

Q1) What is the cloud? Please explain SaaS, PaaS, and IaaS.

Ans: In computing, the cloud refers to the delivery of computing services (servers, storage, databases, networking, software, and more) over the Internet. These services are hosted on a provider's remote servers and can be accessed on demand, so individuals and businesses do not need to own physical infrastructure. The result is flexibility, scalability, and cost efficiency.

i) SaaS (Software as a Service):

Definition: Provides software applications over the internet.

Examples: Google Workspace, Microsoft 365, Dropbox.

Benefits: No need to install or maintain software on individual devices; automatic updates; accessibility from anywhere with an internet connection.

Key Features:

No installation or maintenance, automatic updates, subscription or pay-as-you-go pricing.

ii) PaaS (Platform as a Service):

Definition: Offers a platform allowing customers to develop, run, and manage applications without dealing with the underlying infrastructure.

Examples: Google App Engine, Azure App Service, AWS Elastic Beanstalk.

Benefits: Simplifies application development and deployment; reduces the need to manage hardware and software layers; scalable resources.

Key Features:

Simplifies the app development lifecycle; provides middleware, development tools, and database management; offers scalable environments.

iii) IaaS (Infrastructure as a Service):

Definition: Provides virtualized computing resources over the internet, such as servers, storage, and networking.

Examples: Amazon EC2 (AWS), Azure Virtual Machines (Microsoft Azure), Google Compute Engine (Google Cloud Platform).

Benefits: High level of control over the infrastructure; pay-as-you-go model; scalable and flexible resources.

Key Features:

Highly scalable, gives control over the infrastructure, pay for what you use.
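One way to make the three models concrete is to list which layers of the stack the customer still manages under each. The following Python sketch encodes a simplified version of the commonly drawn responsibility diagram; the layer names and the exact boundary for each model are assumptions for illustration, and individual services draw the line slightly differently.

```python
# Layers of a typical application stack, ordered from hardware up.
LAYERS = [
    "networking", "storage", "servers", "virtualization",
    "operating_system", "middleware", "runtime", "data", "application",
]

# Simplified assumption: index of the lowest layer the customer manages.
# Every layer below that index is managed by the provider.
CUSTOMER_STARTS_AT = {
    "on_premises": 0,                          # customer manages everything
    "iaas": LAYERS.index("operating_system"),  # provider runs hardware and hypervisor
    "paas": LAYERS.index("data"),              # provider also runs OS, middleware, runtime
    "saas": len(LAYERS),                       # provider runs the whole application
}


def customer_managed(model: str) -> list[str]:
    """Return the layers the customer is responsible for under a given model."""
    return LAYERS[CUSTOMER_STARTS_AT[model]:]


def provider_managed(model: str) -> list[str]:
    """Return the layers the provider is responsible for under a given model."""
    return LAYERS[:CUSTOMER_STARTS_AT[model]]


if __name__ == "__main__":
    for model in ("iaas", "paas", "saas"):
        print(model, "-> customer manages:", customer_managed(model) or "nothing")
```

Under this model, PaaS leaves only the data and application layers to the customer, while IaaS also hands over the operating system, middleware, and runtime.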

Q2) What is the relationship between an Availability Zone and a Region?

Ans:

Relationship Between Availability Zone (AZ) and Region in Cloud Computing

In cloud computing, Regions and Availability Zones (AZs) are organizational units used to provide high availability, fault tolerance, and disaster recovery capabilities. Here’s how they are related:

Region

- Definition: A Region is a geographic area that consists of multiple, physically separated data centers (Availability Zones).

- Examples:

- AWS: us-east-1 (Northern Virginia), eu-west-1 (Ireland).

- Azure: East US, West Europe.

- Google Cloud: asia-south1 (Mumbai), us-central1 (Iowa).

Availability Zone (AZ)

- Definition: An Availability Zone is an isolated location within a Region, made up of one or more discrete data centers. Each AZ has its own power, cooling, and networking, and is designed to be independent of the other AZs in the same Region.

- Purpose: To provide redundancy and high availability by keeping a failure in one AZ from affecting the others.

- Example: In AWS, the US East (N. Virginia) Region (us-east-1) has multiple AZs, named us-east-1a, us-east-1b, us-east-1c, and so on.

Key Relationships

- Regions Contain Multiple AZs

- A Region is made up of two or more Availability Zones; most AWS Regions have three or more.

- This ensures high availability even if one AZ goes down.

- Fault Isolation

- AZs are designed to be fault-isolated.

- If one AZ experiences issues (like power outages), the others in the same Region remain unaffected.

- Data Redundancy

- Cloud providers allow replication of data and services across AZs within the same Region for redundancy and disaster recovery.

- Example: A database could have primary storage in us-east-1a and a backup in us-east-1b.

- Low Latency Connectivity

- AZs within a Region are connected by high-speed, low-latency networking to support synchronous data replication and seamless failover.

- Structure: A Region contains multiple Availability Zones. For example, an AWS Region might have 3 to 6 AZs.

- Purpose: Using multiple AZs within a Region allows you to build highly available and fault-tolerant applications. If one AZ goes down, the others can continue to operate.

- Deployment: When deploying applications in the cloud, it is common practice to spread resources across multiple AZs to ensure resilience and minimize downtime.

By designing cloud architectures that utilize multiple AZs within a Region, businesses can ensure their applications remain available and performant, even in the face of unexpected failures or maintenance events.
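To see why spreading resources across AZs matters, the sketch below places instances round-robin across three AZs and counts how many survive if one AZ fails. The AZ names and instance counts are illustrative, and round-robin is a simplification: Amazon EC2 Auto Scaling tries to keep instances balanced across the enabled AZs, but its actual placement logic is more involved.

```python
def spread_across_azs(instance_count: int, azs: list[str]) -> dict[str, int]:
    """Place instances round-robin so per-AZ counts differ by at most one."""
    if not azs:
        raise ValueError("at least one Availability Zone is required")
    placement = {az: 0 for az in azs}
    for i in range(instance_count):
        placement[azs[i % len(azs)]] += 1
    return placement


def capacity_after_az_failure(placement: dict[str, int], failed_az: str) -> int:
    """Count the instances still running if every instance in failed_az is lost."""
    return sum(count for az, count in placement.items() if az != failed_az)


if __name__ == "__main__":
    zones = ["us-east-1a", "us-east-1b", "us-east-1c"]
    placement = spread_across_azs(6, zones)
    print(placement)  # {'us-east-1a': 2, 'us-east-1b': 2, 'us-east-1c': 2}
    print(capacity_after_az_failure(placement, "us-east-1a"))  # 4
```

Placing all six instances in a single AZ would leave zero running after that AZ fails, while the spread layout keeps four.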

Q3) Explain AWS IAM. Describe AAA (Authentication, Authorization, and Accounting).

Ans:

AWS Identity and Access Management (IAM) is a service that lets you securely control who can access which AWS services and resources, and what they can do with them. With IAM, you can:

- Create Users, Groups, and Roles: Define who can access AWS resources.

- Assign Permissions: Control what actions users and roles can perform on specific resources.

- Secure Access: Use fine-grained permissions, multi-factor authentication (MFA), and policy-based access control.

AAA (Authentication, Authorization, and Accounting)

AAA is a framework for controlling access to computer resources, enforcing policies, and monitoring usage.

1. Authentication

- Definition: The process of verifying the identity of a user or system.

- Examples: Username and password, biometric scans, multi-factor authentication (MFA).

- Purpose: Ensures that the user or system is who they claim to be.

2. Authorization

- Definition: The process of determining whether an authenticated user has permission to access a resource or perform an action.

- Examples: Role-based access control (RBAC), access control lists (ACLs), policy rules.

- Purpose: Defines what an authenticated user is allowed to do within the system.

3. Accounting

- Definition: The process of recording and monitoring the actions and activities of users within a system.

- Examples: Logging access attempts, tracking resource usage, auditing.

- Purpose: Helps in auditing, reporting, and understanding user behavior and system usage for security and compliance.
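In AWS, authorization is expressed through JSON IAM policies. The Python sketch below models a simplified version of IAM's evaluation logic: an explicit Deny always wins, otherwise a matching Allow grants access, and anything not allowed is implicitly denied. The policy, bucket name, and `is_allowed` helper are hypothetical; real IAM evaluation also considers conditions, resource-based policies, permissions boundaries, and service control policies, and matches action names case-insensitively, none of which this sketch does.

```python
from fnmatch import fnmatchcase

# Hypothetical identity-based policy; "example-bucket" is a placeholder.
POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:ListBucket"],
            "Resource": [
                "arn:aws:s3:::example-bucket",
                "arn:aws:s3:::example-bucket/*",
            ],
        },
        {
            "Effect": "Deny",
            "Action": "s3:*",
            "Resource": "arn:aws:s3:::example-bucket/secret/*",
        },
    ],
}


def _as_list(value):
    """Policy fields may hold a single string or a list of strings."""
    return value if isinstance(value, list) else [value]


def is_allowed(policy: dict, action: str, resource: str) -> bool:
    """Simplified evaluation: explicit Deny wins, then Allow, else implicit deny."""
    allowed = False
    for statement in policy["Statement"]:
        action_matches = any(fnmatchcase(action, p) for p in _as_list(statement["Action"]))
        resource_matches = any(fnmatchcase(resource, p) for p in _as_list(statement["Resource"]))
        if not (action_matches and resource_matches):
            continue
        if statement["Effect"] == "Deny":
            return False  # an explicit deny overrides any allow
        allowed = True
    return allowed


if __name__ == "__main__":
    print(is_allowed(POLICY, "s3:GetObject", "arn:aws:s3:::example-bucket/report.csv"))      # True
    print(is_allowed(POLICY, "s3:GetObject", "arn:aws:s3:::example-bucket/secret/key.txt"))  # False
    print(is_allowed(POLICY, "s3:PutObject", "arn:aws:s3:::example-bucket/report.csv"))      # False
```

The third call is denied even though no statement mentions `s3:PutObject`: absent an Allow, the default is deny, which is what makes least-privilege policies work.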

Why AWS IAM is Critical

- Ensures least privilege by granting only necessary access.

- Facilitates compliance with security standards.

- Provides audit trails for troubleshooting and accountability when combined with AWS CloudTrail, which records API calls made by IAM users and roles.

Q4) How do you upgrade or downgrade a system with near-zero downtime?

Ans: Upgrading or downgrading a system with near-zero downtime keeps it available while minimizing disruption for users. The main strategies and best practices are:

Blue-Green Deployment

- Set Up a New Environment: Create a new environment (the “green” environment) with the upgraded or downgraded system.

- Deploy to the New Environment: Deploy the new version of the system to the green environment.

- Test the New Environment: Thoroughly test the new environment to ensure everything is working correctly.

- Switch Traffic: Once testing is complete, switch traffic from the old environment (the “blue” environment) to the new green environment.

- Monitor: Monitor the new environment closely to ensure there are no issues.

Benefits:

- Near-instant rollback: switch traffic back to the blue environment if problems appear.

- No downtime for users during the transition.
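The core of blue-green deployment is a single switch that points all traffic at one of two complete environments. This Python sketch models that switch; the `BlueGreenRouter` class and its methods are hypothetical. In AWS the same idea is typically implemented by pointing a load balancer listener at a different target group, shifting weighted DNS records in Route 53, or swapping environment URLs in Elastic Beanstalk.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class BlueGreenRouter:
    """Hypothetical router: all traffic goes to whichever environment is live."""
    live: str = "blue"
    idle: str = "green"

    def route(self) -> str:
        return self.live

    def cut_over(self, is_healthy: Callable[[str], bool]) -> bool:
        """Switch traffic to the idle environment only if it passes a health check."""
        if not is_healthy(self.idle):
            return False  # keep serving from the current environment
        self.live, self.idle = self.idle, self.live
        return True

    def roll_back(self) -> None:
        """Swap back; the previous environment is still running, so this is immediate."""
        self.live, self.idle = self.idle, self.live


if __name__ == "__main__":
    router = BlueGreenRouter()
    print(router.cut_over(lambda env: env == "green"))  # True
    print(router.route())                               # green
    router.roll_back()
    print(router.route())                               # blue
```

Because the old environment stays up until it is deliberately retired, rollback is just another swap rather than a redeployment.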

Rolling Updates

Concept:

Update the system incrementally by deploying new versions to a subset of servers at a time.

Steps:

- Divide servers into batches.

- Take one batch out of service (for example, deregister it from the load balancer), update it, and return it to service.

- Move to the next batch until all servers are updated.

Benefits:

- Ensures continuous availability.

- Gradual transition helps identify issues early.
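A rolling update trades speed for capacity: at any moment, only one batch is out of service. This sketch updates a fleet batch by batch and reports the lowest number of servers that remained in service; the server names and the `update` callback are hypothetical, and health checks are omitted.

```python
from typing import Callable


def make_batches(servers: list[str], batch_size: int) -> list[list[str]]:
    """Split the fleet into consecutive batches of at most batch_size servers."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [servers[i:i + batch_size] for i in range(0, len(servers), batch_size)]


def rolling_update(servers: list[str], batch_size: int,
                   update: Callable[[str], None]) -> int:
    """Update one batch at a time and return the minimum servers kept in service."""
    in_service = set(servers)
    min_in_service = len(in_service)
    for batch in make_batches(servers, batch_size):
        in_service -= set(batch)   # drain the batch (e.g. from a load balancer)
        min_in_service = min(min_in_service, len(in_service))
        for server in batch:
            update(server)         # install the new version on this server
        in_service |= set(batch)   # return the batch to service
    return min_in_service


if __name__ == "__main__":
    fleet = [f"web-{n}" for n in range(1, 7)]
    updated: list[str] = []
    print(rolling_update(fleet, 2, updated.append))  # 4
    print(updated == fleet)                          # True
```

With six servers and a batch size of two, at least four servers always stay in service; a larger batch size finishes sooner but lowers that floor.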

Canary Deployment

Concept:

Deploy the new version to a small subset of users first (a “canary group”) before a full rollout.

Steps:

- Deploy the new version to a small portion of servers.

- Monitor performance and user feedback.

- Gradually increase deployment if no issues arise.

Benefits:

- Limits risk exposure.

- Real-world testing on live traffic.
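Canary traffic is often split by hashing a stable identifier, so each user consistently sees the same version while the canary share is raised step by step. The function below is a hypothetical sketch of that technique, not the routing logic of any particular AWS service.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically send about canary_percent% of users to the canary.

    Hashing the user ID means a given user always lands in the same bucket.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return bucket < canary_percent


if __name__ == "__main__":
    users = [f"user-{n}" for n in range(10_000)]
    share = sum(routes_to_canary(u, 10) for u in users) / len(users)
    print(f"canary share: {share:.1%}")
```

The printed share should land close to 10%. Raising `canary_percent` from, say, 5 to 25 to 100 keeps every user who was already on the canary on it, because a user's bucket never changes.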

General Steps for Near-Zero Downtime Upgrades/Downgrades

- Open EC2 Console: Access your cloud provider’s management console (e.g., AWS EC2).

- Choose Operating System AMI: Select the appropriate AMI (Amazon Machine Image) for the new instance type.

- Launch an Instance: Launch an instance with the new instance type.

- Install Updates: Install all necessary updates and applications on the new instance.

- Test the Instance: Test the new instance to ensure it’s working correctly.

- Switch Over: Shift traffic to the new instance (for example, by registering it with the load balancer or reassigning the Elastic IP address), then retire the older instance.

- Monitor: Monitor the new instance to ensure everything is running smoothly.


Q5) What is a DDoS attack, and what services can minimize its impact?

Ans:

What is a DDoS Attack?

A Distributed Denial of Service (DDoS) attack is a malicious attempt to disrupt the normal functioning of a server, service, or network by overwhelming it with a flood of Internet traffic.

- Distributed: The attack is carried out by multiple compromised devices (often forming a botnet) from various locations.

- Denial of Service: The target system becomes unavailable to legitimate users due to resource exhaustion (bandwidth, CPU, memory, etc.).

Services to Minimize DDoS Attacks

Several services and best practices can help minimize the impact of DDoS attacks:

- Cloud-Based DDoS Protection: Services like AWS Shield (Shield Standard is applied automatically at no extra cost; Shield Advanced is a paid tier with additional protections), Azure DDoS Protection, and Cloudflare offer scalable defenses that can handle large-scale attacks. These services use a network of servers around the world to absorb and mitigate malicious traffic.

- Traffic Analysis and Filtering: Tools that monitor network traffic in real-time to identify and separate legitimate requests from malicious ones. Examples include Radware DefensePro and F5 BIG-IP.

- Anycast Network Diffusion: Announcing the same IP address from many locations so that incoming traffic, including attack traffic, is spread across a distributed network instead of converging on a single site. This absorbs volumetric attacks and reduces their impact on any one location.

- Geolocation Filtering: Blocking or restricting traffic based on geographic origin to reduce potential attack vectors. This method limits access from regions known for high malicious activity levels.

- Load Balancing: Distributing traffic evenly across multiple servers to ensure no single server is overwhelmed. This helps in maintaining service availability even during an attack.

- Redundancy and Failover: Setting up backup systems and failover mechanisms to ensure continuous service availability in case of an attack.

- Web Application Security: Deploying Web Application Firewalls (WAFs), such as AWS WAF, to filter malicious HTTP requests and protect against application-layer attacks.

By implementing these services and best practices, organizations can significantly reduce the risk and impact of DDoS attacks, ensuring their services remain available and resilient.
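Traffic filtering services often include rate-based rules that block a client after too many requests within a time window; AWS WAF's rate-based rules work along these lines. The Python class below is a hypothetical per-IP sliding-window limiter illustrating the idea. The limits are arbitrary, and a limiter like this only helps against application-layer floods: volumetric attacks must be absorbed upstream by services such as AWS Shield.

```python
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most `limit` requests per client IP in any `window_seconds` span."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window = window_seconds
        self._hits: defaultdict[str, deque[float]] = defaultdict(deque)

    def allow(self, client_ip: str, now: float) -> bool:
        hits = self._hits[client_ip]
        # Forget requests that have fallen out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over the limit: block (blocked requests are not counted)
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowRateLimiter(limit=3, window_seconds=10)
    print([limiter.allow("198.51.100.7", t) for t in (0, 1, 2, 3)])  # [True, True, True, False]
    print(limiter.allow("203.0.113.9", 3))   # True: each IP has its own budget
    print(limiter.allow("198.51.100.7", 10))  # True: the request at t=0 has expired
```

The IP addresses come from documentation-only ranges; timestamps are passed in explicitly so the behavior is deterministic.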

Q6) What is the difference between a snapshot, an image, and a template?

Ans:

Snapshots, images, and templates are related but serve different purposes in the context of virtualization and cloud computing. Here’s a detailed breakdown of their differences:

Snapshot

Definition:

A snapshot is a point-in-time copy of a system’s state, including its data and configuration.

Key Characteristics:

- Purpose: Primarily used for backup and recovery.

- State: Captures the current state of a disk or volume (e.g., EBS in AWS).

- Incremental: Often stores only the changes made since the last snapshot to save storage space.

- Use Case:

- Quickly roll back to a previous state.

- Safeguard data before applying updates or changes.

Examples:

- AWS: EBS Snapshot.

- Azure: Managed Disk Snapshot.

Analogy:

Think of a snapshot as a save point in a video game—you can revert to it if something goes wrong.
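The "incremental" property means each snapshot stores only the blocks that changed since the previous one, yet any snapshot can still be restored to a full volume. The sketch below models that idea with an in-memory dictionary of blocks. It is a simplification, not how EBS is implemented: it assumes a fixed-size volume whose blocks are only overwritten, never removed, and it restores by replaying deltas in order.

```python
Volume = dict[int, bytes]  # block index -> block contents


class IncrementalSnapshots:
    """Toy model: each snapshot stores only blocks that differ from the previous one."""

    def __init__(self) -> None:
        self._deltas: list[Volume] = []

    def take(self, volume: Volume) -> int:
        """Record the changed blocks and return the new snapshot's ID."""
        previous = self.restore(len(self._deltas) - 1) if self._deltas else {}
        delta = {i: block for i, block in volume.items() if previous.get(i) != block}
        self._deltas.append(delta)
        return len(self._deltas) - 1

    def stored_blocks(self, snapshot_id: int) -> int:
        """Number of blocks physically stored for one snapshot."""
        return len(self._deltas[snapshot_id])

    def restore(self, snapshot_id: int) -> Volume:
        """Rebuild the full volume by applying deltas up to snapshot_id."""
        volume: Volume = {}
        for delta in self._deltas[: snapshot_id + 1]:
            volume.update(delta)
        return volume


if __name__ == "__main__":
    store = IncrementalSnapshots()
    disk = {0: b"boot", 1: b"app-v1", 2: b"data"}
    first = store.take(disk)
    disk[1] = b"app-v2"  # change a single block
    second = store.take(disk)
    print(store.stored_blocks(first), store.stored_blocks(second))  # 3 1
    print(store.restore(first)[1], store.restore(second)[1])        # b'app-v1' b'app-v2'
```

The second snapshot stores a single block, but restoring it still yields the complete volume, which is why rolling back to any save point works.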

Image

- Definition: An image is a complete copy of a virtual machine’s disk that can be used to create new virtual machines with the same configuration and installed software.

- Usage: Used to launch new instances or virtual machines with the same operating system, applications, and configuration as the original.

- Characteristics: Contains everything needed to boot and run the system, including the operating system, installed applications, and configuration settings.

- Example: Creating an Amazon Machine Image (AMI) to launch new EC2 instances in AWS.

Template

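- Definition: A template is a reusable definition (a saved configuration object, such as an AWS launch template, or a file, such as an Azure Resource Manager template) used to create virtual machines with a predefined set of resources, configurations, and software.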

- Usage: Used to standardize deployments and ensure consistency across multiple virtual machines.

- Characteristics: Defines the hardware configuration (CPU, memory, storage), network settings, and sometimes the installed software and operating system.

- Example:

- AWS: Launch Template.

- Azure: Resource Manager Template.

- Google Cloud: Instance Template.

When to Use Each:

- Snapshot:

- Before making risky changes to a system (e.g., upgrades, updates).

- For regular backups of disks or volumes.

- Image:

- To create and launch pre-configured VMs.

- To standardize server setups.

- Template:

- To automate the deployment of consistent environments.

- For multi-resource configurations, including network and storage.

Q7) How will you create an Auto Scaling group in AWS? Explain the purpose of creating an Auto Scaling group.

Ans:

Creating an Auto Scaling Group in AWS

- Open the EC2 Console:

- Navigate to the EC2 Dashboard in the AWS Management Console.

- Create a Launch Template or Configuration:

- In the EC2 console navigation pane, under Instances, choose Launch Templates. (Launch configurations are an older option that AWS recommends replacing with launch templates.)

- Create a new launch template with the desired instance type, AMI (Amazon Machine Image), key pair, security groups, and any necessary user data.

- Configure the Auto Scaling Group:

- Under Auto Scaling, select Auto Scaling Groups.

- Click Create Auto Scaling Group.

- Provide a name for the Auto Scaling group.

- Choose the launch configuration or template you created earlier.

- Select the VPC and subnets where you want the instances to run.

- Configure load balancer settings (optional) if you want to use an existing load balancer or create a new one.

- Set Group Size and Scaling Policies:

- Define the desired number of instances, as well as the minimum and maximum sizes of the group.

- Configure scaling policies to specify how the group should adjust capacity based on demand. This can include target tracking, step scaling, or scheduled scaling policies.

- Configure Notifications and Tags:

- Set up notifications to receive alerts on scaling events.

- Add tags for identification and organization of resources.

- Review and Create:

- Review your configuration settings and click Create Auto Scaling Group to finalize the setup.

Purpose of Creating an ASG

- Elasticity: Automatically scale resources up or down based on demand.

- High Availability: Ensure the application remains available even during traffic spikes or instance failures.

- Cost Optimization: Run the right number of instances for the workload at any given time.

- Redundancy: Distribute instances across multiple Availability Zones to enhance fault tolerance.

Steps to Create an Auto Scaling Group

Step 1: Create a Launch Template or Configuration

- Purpose: Defines the configuration for EC2 instances, such as AMI, instance type, key pair, security groups, and other settings.

- Navigate: Go to the AWS Management Console → EC2 → Launch Templates.

- Create Template: Provide the following details:

- AMI ID (Amazon Machine Image).

- Instance type (e.g., t2.micro).

- Key pair for SSH access.

- Security group for instance network rules.

- Block storage configurations.

Step 2: Define Auto Scaling Group

- Navigate: Go to the AWS Management Console → EC2 → Auto Scaling Groups.

- Create Group: Provide the following details:

- Launch template or configuration created in Step 1.

- Name of the ASG.

- VPC and subnets (Availability Zones) where the instances will run.

- Load Balancer integration (if applicable).

Step 3: Configure Scaling Policies

- Define how the ASG should scale based on metrics or schedules:

- Target Tracking Scaling: Automatically maintain a desired metric, such as CPU utilization.

- Step Scaling: Scale in steps based on predefined thresholds.

- Scheduled Scaling: Scale based on a specific time or date.

Step 4: Set Group Size

- Specify:

- Minimum size: The least number of instances to maintain.

- Desired capacity: The number of instances to start with.

- Maximum size: The maximum number of instances to scale up to.

Step 5: Add Notifications (Optional)

- Configure notifications to receive updates on scaling activities (e.g., via SNS).

Step 6: Review and Create

- Review all settings and confirm to create the Auto Scaling Group.
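The same setup can be scripted with the AWS SDK for Python (boto3), whose Auto Scaling client exposes `create_auto_scaling_group` and `put_scaling_policy`. The sketch below only builds the request parameters and takes the client as an argument, so it can be checked without an AWS account. The group name, launch template name, subnet IDs, sizes, and the 50% CPU target are placeholder assumptions, and the launch template must already exist.

```python
from typing import Any


def asg_params(name: str, launch_template: str, subnet_ids: list[str],
               min_size: int, desired: int, max_size: int) -> dict[str, Any]:
    """Build keyword arguments for create_auto_scaling_group."""
    if not min_size <= desired <= max_size:
        raise ValueError("expected min_size <= desired <= max_size")
    return {
        "AutoScalingGroupName": name,
        "LaunchTemplate": {"LaunchTemplateName": launch_template, "Version": "$Latest"},
        "MinSize": min_size,
        "DesiredCapacity": desired,
        "MaxSize": max_size,
        # Comma-separated subnet IDs; subnets in different AZs spread the instances.
        "VPCZoneIdentifier": ",".join(subnet_ids),
    }


def cpu_target_tracking_params(asg_name: str, target_cpu: float) -> dict[str, Any]:
    """Build keyword arguments for put_scaling_policy (target tracking on average CPU)."""
    return {
        "AutoScalingGroupName": asg_name,
        "PolicyName": f"{asg_name}-cpu-target-tracking",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization"
            },
            "TargetValue": float(target_cpu),
        },
    }


def create_asg(autoscaling_client: Any, group: dict[str, Any], policy: dict[str, Any]) -> None:
    """Create the group, then attach the scaling policy, via a boto3 Auto Scaling client."""
    autoscaling_client.create_auto_scaling_group(**group)
    autoscaling_client.put_scaling_policy(**policy)


if __name__ == "__main__":
    group = asg_params("web-asg", "web-template", ["subnet-aaaa1111", "subnet-bbbb2222"],
                       min_size=2, desired=2, max_size=6)
    policy = cpu_target_tracking_params("web-asg", 50)
    print(group)
    print(policy)
```

With credentials configured, you would pass `boto3.client("autoscaling")` as the first argument to `create_asg`. Validating min/desired/max before calling AWS catches the most common sizing mistake early.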

Q8) What is CI/CD? Please configure AWS CodeCommit. Please provide details with a diagram and the flow of implementation.

Ans:

What is CI/CD?

CI/CD stands for Continuous Integration and Continuous Deployment/Delivery, a set of practices that automate software development processes to ensure frequent, reliable, and efficient delivery of updates.

- Continuous Integration (CI): This practice involves frequently integrating code changes into a shared repository, followed by automated builds and tests. The goal is to detect and fix integration issues early and improve code quality.

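- Continuous Delivery/Deployment (CD): Continuous delivery automatically prepares every change for release, usually with a manual approval before production; continuous deployment goes one step further and releases every change that passes the pipeline to production automatically. Both aim to deliver new features and fixes to users quickly and reliably.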

Steps to Configure AWS CodeCommit

1. Create a CodeCommit Repository

- Navigate: AWS Management Console → CodeCommit → Create Repository.

- Enter Details: Provide a name and optional description.

- Create Repository: Note the HTTPS/SSH clone URL for future use.

2. Set Up IAM Permissions

- IAM Role/User: Create a role or user with permissions for CodeCommit.

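- Policy: Attach a managed policy such as AWSCodeCommitPowerUser for developers; reserve AWSCodeCommitFullAccess for administrators, because it also allows deleting repositories.

- Credentials: Use Git credentials generated in IAM (HTTPS), an SSH key uploaded to IAM, or the AWS CLI credential helper with access keys.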

3. Clone the Repository

Via HTTPS: Use the Git credentials (username and password) generated for your IAM user, along with the HTTPS clone URL noted in step 1.

4. Configure a CI/CD Pipeline

Use AWS services like CodePipeline, CodeBuild, and CodeDeploy to automate the CI/CD process.

- CodePipeline Setup:

- Source Stage: Add CodeCommit as the source.

- Build Stage: Use CodeBuild to compile and test the code.

- Deploy Stage: Use CodeDeploy to deploy the application to an EC2 instance, ECS, or Lambda.

- CodeBuild Setup:

- Create a buildspec.yml file specifying build steps.

- CodeDeploy Setup:

- Configure deployment settings and targets (for example, an appspec.yml file and a deployment group).
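The flow of implementation can be summarized as: developer pushes to CodeCommit → CodePipeline detects the change → CodeBuild builds and tests → CodeDeploy deploys to EC2, ECS, or Lambda. The Python sketch below models only that control flow (stages run in order and a failure stops the pipeline); the stage actions are hypothetical stubs standing in for the real services.

```python
from typing import Callable

# A stage is a name plus an action that returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order, stopping at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed: list[str] = []
    for name, action in stages:
        if not action():
            print(f"{name} failed; later stages are skipped")
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    # Hypothetical stubs: in AWS, each step is performed by the service named.
    stages: list[Stage] = [
        ("Source (CodeCommit)", lambda: True),
        ("Build (CodeBuild)", lambda: True),    # e.g. compile and run unit tests
        ("Deploy (CodeDeploy)", lambda: True),
    ]
    print(run_pipeline(stages))
```

Stopping at the first failed stage is the key CI/CD property: a change that fails its build or tests never reaches the deploy stage.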
